-- TYPE MISMATCH --------------- /Users/rgb/Projects/dreyeck/blog/elm-pages-starter-express/src/Wiki.elm This function cannot handle the argument sent through the (|>) pipe: 54| paragraph.text 55| |> parseWikiLink 56| |> List.map renderWikiLink ^^^^^^^^^^^^^^^^^^^^^^^ The argument is: Result (List DeadEnd) WikiLink But (|>) is piping it to a function that expects: List String

Das elektronische Schulbuch 📄 inf-schule ![]()

de.dreyeck.ch

⇒ Translate Link Words? – Web Words – page ⇒ 📄

because links are not portals through which we could see what to expect.

You will find two kinds of links in the text of wiki pages. Internal links go between wiki pages. External links go to other places on the internet.

⇒

Es gibt zwei Arten von Links im Text von Wiki-Seiten. Interne Links führen zwischen Wikiseiten. Externe Links führen zu anderen Stellen im Internet.

Der Server, der das clientseitige Javascript bereitstellt, mit dem Sie die Suche im föderierten Wiki starten, wird Origin-Server genannt. Er ist etwas Besonderes.

➜ Desktop rsync -avz ./Bildschirmaufnahme\ 2023-09-13\ um\ 10.11.43.mov rgb@wiki.ralfbarkow.ch:/home/rgb/.wiki/wiki.ralfbarkow.ch/assets/pages/2023-09-13/

pages/2023-09-13

Find pages sharing any of these items

SEARCH ANY ITEMS 014a48b53d8e7e09 560451b26f631758 a5863ae79bd552d9 bfacfa99a093ff32 e303cb53a4bee4a1

JSON for Ursprungsserver ⇒ JSON for Origin Server

Introduction to the elm/parser package page ![]()

How it works

If you have worked with JSON in Elm, the pattern will look familiar. We build a parser, a type that consists of smaller composable parsers. This parser is a value that describes the parsing rules. To actually perform the parsing, we call Parser.run parser str.

Parser.run then applies the appropriate parsers to the input string one by one, consuming zero or more characters at each step.

How about adding a parser (or more parsers) which gobble the paragraph.text up regardless of whether a wiki link was found?

Certainly, if you want to ensure that the parser consumes the entire paragraph text, regardless of whether a wiki link is found, you can achieve this by adding a parser that captures the remaining text. Here's an updated version of the code: (ChatGPT version from August 3)

-- Define a parser to capture the remaining text in a paragraph remainderParser : Parser String remainderParser = chompWhile (\c -> c /= '[') -- Modify the wikiLink parser to handle multiple occurrences of "" and "" wikiLink : Parser WikiLink wikiLink = succeed WikiLink |. symbol "[[" |= (getChompedString <| chompWhile (\c -> c /= ']')) |. symbol "]]" -- Combine the wikiLink parser and remainderParser wikiLinkOrRemainder : Parser (Either WikiLink String) wikiLinkOrRemainder = oneOf [ Left <$> wikiLink , Right <$> remainderParser ] -- Modify the parseWikiLink function to handle either a wikiLink or the remainder of the text parseWikiLink : String -> Result (List Parser.DeadEnd) (List (Either WikiLink String)) parseWikiLink str = Parser.run (zeroOrMore wikiLinkOrRemainder) str

In this updated code: 1. We introduce a remainderParser which will consume characters until it encounters a [.

2. We create a new parser wikiLinkOrRemainder using oneOf to handle either a wiki link or the remaining text.

3. The parseWikiLink function now returns a list of Either WikiLink String, where Left represents a wiki link, and Right represents the remaining text.

This approach ensures that even if a wiki link is not found, the parser will still consume the entire paragraph text. If a wiki link is found, it will be captured, but if not, the remaining text will be captured instead. (ChatGPT version from August 3)

oneOf

See section 4 (Parser) of the MiniLatex paper.

In this section, we discuss the combinators in the elm/parser library.

Regular expressions are quite confusing and difficult to use. This library provides a coherent alternative that handles more cases and produces clearer code. github ![]() , page

, page ![]()

Notable are the pipeline combinators (|.) and (|=) discussed below.

The parser is built from elementary parsers that recognize fixed strings, e.g., \begin{theorem}, whitespace, words, etc. Words are defined as strings not containing whitespace and not beginning with a reserved character such as a backslash or a dollar sign.

There are also special parsers such as

succeed : a → Parser a

which always succeeds and returns a value of type a.

Let's build a parser ! video ![]()

Note: Compare this with our parser for wiki links (reserved characters are doubled square brackets): code ![]()

link : Parser WikiLink link = succeed WikiLink |. symbol "[[" |= (getChompedString <| chompWhile (\c -> c /= ']')) |. symbol "]]"

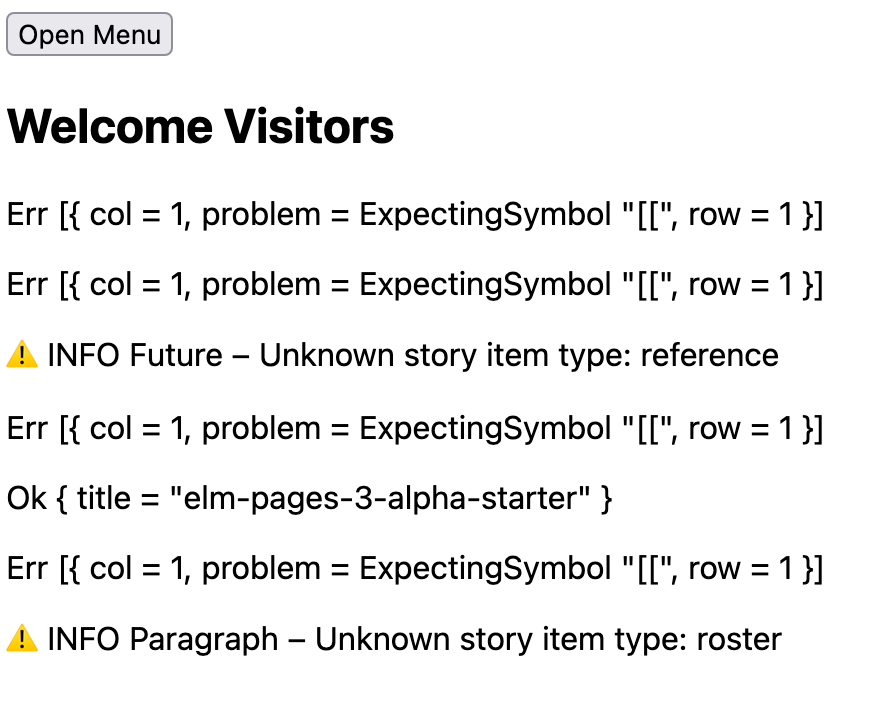

We get an error message (see the following figure) stating that the expectation of finding the doubled square brackets at the beginning of the paragraph was disappointed.

Expecting Symbol

We come back to the proposal to use the parser function oneOf.

From given parsers one may construct others using combinators. Thus the top level parser is constructed using the oneOf parser function, which takes a list of parsers as input, applies each in turn, returning on the first parser to succeed, otherwise finishing with an error. code ![]()

{-| Production: $ LatexExpression &\Rightarrow Words\ |\ Comment\ |\ IMath\ |\ DMath\ |\ Macro\ |\ Env $ -} latexExpression : Parser LatexExpression latexExpression = oneOf [ texComment , lazy (\_ -> environment) , displayMathDollar , displayMathBrackets , inlineMath ws , macro ws , smacro , words ]

Notice the close correspondence between the production for the nonterminal symbol LatexExpr and the construction of the parser: reading the right-hand side of the first is like reading the argument to oneOf from top to bottom. video ![]()

> Reading across the right-hand side of the production is like reading down the code for the parser. So there there's an intimate relationship between these production rules and parser combinators.

See words in Parser.elm: code ![]()

{- WORDS AND TEXT -} words : Parser LatexExpression words = inContext "words" <| (succeed identity |= repeat oneOrMore word |> map (String.join " ") |> map LXString ) {-| Like `words`, but after a word is recognized spaces, not spaces + newlines are consumed -} specialWords : Parser LatexExpression specialWords = inContext "specialWords" <| (succeed identity |= repeat oneOrMore specialWord |> map (String.join " ") |> map LXString )

and in ParserHelpers.elm: code ![]()

word : Parser String word = (inContext "word" <| succeed identity |. spaces |= keep oneOrMore notSpecialCharacter |. ws ) -- |> map transformWords {-| Like `word`, but after a word is recognized spaces, not spaces + newlines are consumed -} specialWord : Parser String specialWord = inContext "specialWord" <| succeed identity |. spaces |= keep oneOrMore notSpecialTableOrMacroCharacter |. spaces

notSpecialTableOrMacroCharacter : Char -> Bool notSpecialTableOrMacroCharacter c = not (c == ' ' || c == '\n' || c == '\\' || c == '$' || c == '}' || c == ']' || c == '&')

~

With this site I am participating in and learning from at least one wiki dojo. I'm happy to join more.

The owner of this site can create new sites as subdomains of this site. The simplest option is to create the new site with the same owner and login information as used here.

We can disable automatic creation of new sites in a farm by setting server config parameters. Now we explore possible automated workflows that will replace this convenience.

martech ⇒ Michael Martin

martech.dojo.fed.wiki

The Crazy Wiki Place page ![]() – assets/home/index.html

– assets/home/index.html

register.js is stored in the assets/home folder and deployed as a node.js application.

~

How to build interesting parsers page ![]()

> Yes, good! But I don’t want to run the parser at the beginning of the source string. I want to run it at the current parser position.

It’s possible to implement a negative lookahead parser in the same way, but the resulting code wouldn’t be easily recognizable as the inverse of the above (positive) lookahead parser. It would be nice to have a common code structure like an if ... then ... else ... with the branches flipped for the negative lookahead parser.

[…] “getResultAndThen” because we determine the Result of the Parser.run 1 function, “getResultAndThen” like the other “getXxx” functions in the elm/parser package which return information without affecting the current parser state, and “getResultAndThen” because the signature looks similar to a flipped version of Parser.andThen:

flippedAndThen : Parser a -> (a -> Parser b) -> Parser b getResultAndThen : Parser a -> (Result (List Parser.DeadEnd) a -> Parser b) -> Parser b

negativeLookAhead : String -> Parser.Parser a -> Parser.Parser () negativeLookAhead msg parser = getResultAndThen parser <| \result -> case result of Ok _ -> Parser.problem msg Err _ -> Parser.succeed ()

As you can see, it has exactly the same structure as the (positive) lookAhead parser, with both cases flipped (more or less).

YOUTUBE M9ulswr1z0E "Demystifying Parsers" by Tereza Sokol

~

Sociotechnical system design for "digital coal mines" linkedin ![]()

VIMEO 861989662 Sosioteknisk systemdesign for “digitale kullgruver” - Trond Hjorteland