This is not the usual kind of computer science you might have learned in a compiler course, because it's the other way around: you have the text first, and then you write the grammar that fits the text, not the other way around.

Parser Stats automatically and incrementally reported with each parse

A Parser reads text to discover structure and meaning. For example, a C language parser can read a C program and understand in a real sense everything that the program has to say. Contrast this to a pattern matcher...

Parser generators have traditionally been used to describe exactly what should be written and anything else is a "syntax error". Wiki allows writers to write what they think makes sense. With Exploratory Parsing we now have a way for the parser writer to discover what has been written after the fact.

> This Inversion of Control mirrors the original thinking behind wiki. Let those who know write as they see fit. Trust people to be regular enough to create lasting value. Use the power of our modern computers and networks to organize that value. github ![]()

See blog post announcing the open-sourcing of this technology. github ![]()

YOUTUBE HCmrVp8WR6c SAO's video of Ward's performance

This was a web based experiment manager that could prepare, monitor, and refine task specific grammars turned into engines with a slightly enhanced version of Ian Piumarta's peg/leg. site ![]()

Transcript

Okay take out your phone. I (Ward Cunningham) got a QRCode at the end of my talk and you'll want to take a shot of it.

I'm serious i don't see anybody working on that so i want tonight to describe to you an open source project that you can use today to parse. It's a tool to parse, to extract data from and a methodology for extracting data from semi-structured text. So semi-structured text is the stuff with patterns in it but you don't know the patterns. People make this stuff they try to make it regular but they only do so well. It's not quite natural language or it's not anything overly specified.

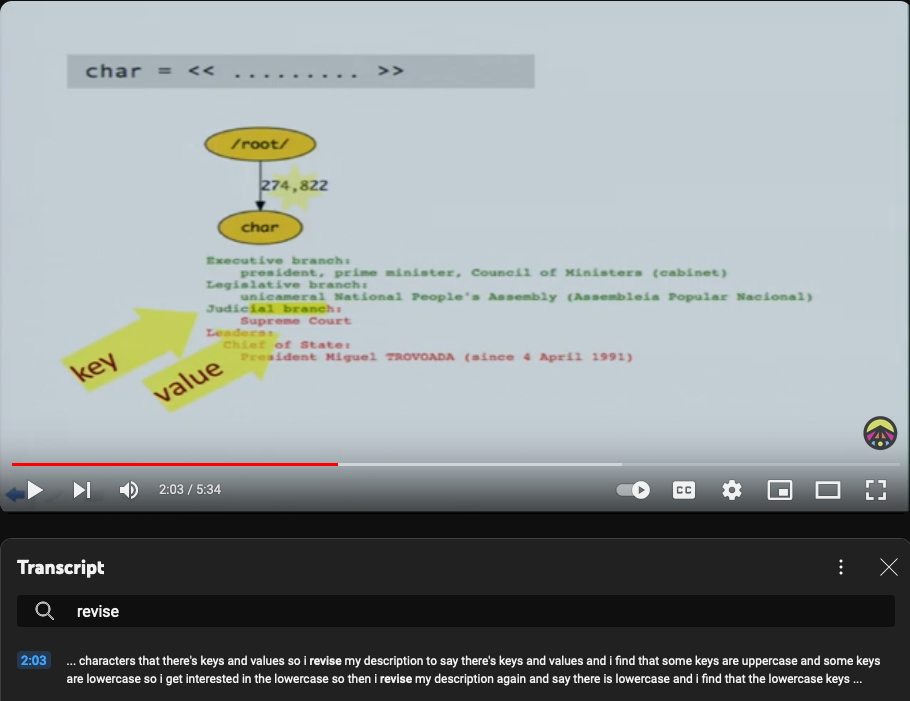

The methodology is simple video ![]() . It says parse what you expect to find and look and see what else you miss. So you get from your own expectations you get to find stuff around it then you iterate through that as fast as you can. Here's an example of parsing this as a Fact is a key and a value or some other characters.

. It says parse what you expect to find and look and see what else you miss. So you get from your own expectations you get to find stuff around it then you iterate through that as fast as you can. Here's an example of parsing this as a Fact is a key and a value or some other characters.

~

We are representing physical Containers by delimiting Boundaries and basic facts by empty Containers.

~

So this is a using compiler tools but they're special compiler tools i also support it with some visualization where i show you what you got if i ran that through a file and found nineteen thousand keys i might wonder what they look like so i have samples here's the tool this is a web application i can run it off of a ec2 or something if that's where my data is and i run huge jobs 87 gigabytes in this case it's fast for a variety of reasons

Let me just walk through this to give you an idea what it's like to develop. Here I start with a saying well i'm looking for characters and dot means any character so let's look for some i see when i find some characters that there's keys and values.

Revise the description video ![]()

So I revise my description to say there's keys and values and i find that some keys are uppercase and some keys are lowercase. So i get interested in the lowercase so then i revise my description again and say there is lowercase and i find that the lowercase keys have just a couple of different values except when they don't so then i revise my description again to say there are familiar values for the lower case and there are some unfamiliar ones and when i do that i find that all but 77 of the keys in this whole data set are these uh unfamiliar lowercase keys so this one probably isn't even really a key.

But you know this is uh this is how quickly i can find out what's in a file i'm following my own interest driven by the actual text in these files the files can be huge i have this tool which manages my experiments because i'm going to follow my nose i might have to back up i might have to explore elsewhere.

This is why it's so fast this is the actual parsing machine does it all with pointers it never moves around a string i can parse as fast as cat can copy files so another thing that makes it fast is this iterative methodology where i can get to useful results very quickly i can parse all of wikipedia in under an hour but more importantly i can parse a useful subset of wikipedia for making these kind of decisions in five seconds.

Now this isn't the ordinary kind of computer science that you might have taken in a compiler class because it's backwards you have the text first and then you write the grammar to match the text not the other way around this is new because it used to be in compilers we'd use regular expressions for recognizing things and then a parser for organizing the result here this is the original wiki code it has a bunch of regular expressions and then it's got pearl coat around it to manage the nesting this is what i can do today i can have the look ahead the regular expression sort of thing and the nesting the parser generator sort of thing all mixed together.

This is called a PEG: Parsing Expression Grammars. It was developed by Bryan Ford and uh at mit and i'm using the implementation called the peg leg done by ian pimarda uh 2004 2007. this is recent stuff this is a blog post i wrote about this when we released this into the open source this year i'm Ward Cunningham by the way and i think you ought to read this post.

Just to make it easy i attacked on this qr code you should all take a picture you know this also has an address there for people who use pencils and i want to thank you for your attention.

I do have a little time left so i thought i'd give you some coming attractions this isn't the only open source work i do here a couple other projects that i hope to show you in future tech ignites i think i'll this will be remembered as my vertical period and uh these are also up there in github. So thank you very much.

~

Developed sediment inspired uniform sampling algorithm. Add elements at period p until sediment reaches limit. Then decimate the sediment by 1/2 and 2x the period. github ![]()

See related Reservoir Sampling which is happy to be uniformly random but not evenly spaced. wikipedia ![]()

Ward's approach here was informed by his positive experience with XML Import in Ruby.